
Operations

1 - Integrating AAF with AIS Kubernetes XNAT Deployment

Applying for AAF Integration ClientId and Secret

AAF have several services they offer which authenticate users, for example, Rapid Connect. We are interested in the AAF OIDC RP service. Please contact AAF Support via email at support@aaf.net.au to apply for a ClientId and Secret.

They will ask you these questions:

1. The service’s redirect URL. This must be based on an actual URL (a DNS name) rather than an IP address, and must use HTTPS.

2. A descriptive name for the service.

3. The organisation name of the service, which must be an AAF subscriber.

4. The service’s purpose: development, testing or production-ready.

5. Your Keybase account id, so the credentials can be shared securely.

For 1. This is extremely important and is based on two options in the openid-provider.properties file:

- siteUrl

- preEstablishedRedirUri

We will use this example below (this is the correct syntax):

openid-provider.properties

siteUrl=https://xnat.example.com

preEstablishedRedirUri=/openid-login

In this case, the answer to 1 should be https://xnat.example.com/openid-login. Submitting https://xnat.example.com will lead to a non-functional AAF setup.
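As a rough illustration of how the two options combine, the sketch below joins siteUrl and preEstablishedRedirUri and checks the result against the two requirements AAF states (HTTPS, and a DNS name rather than an IP address). The function names are hypothetical and are not part of the plugin, and the joining rule is an assumption based on the example above.

```python
import ipaddress
from urllib.parse import urlparse


def build_redirect_uri(site_url, pre_established_redir_uri):
    """Join siteUrl and preEstablishedRedirUri with exactly one slash (assumed rule)."""
    return site_url.rstrip("/") + "/" + pre_established_redir_uri.lstrip("/")


def redirect_uri_problems(uri):
    """Return the reasons a redirect URL would fail AAF's stated requirements."""
    problems = []
    parsed = urlparse(uri)
    if parsed.scheme != "https":
        problems.append("must use HTTPS")
    try:
        # ip_address() only succeeds when the host is an IP literal
        ipaddress.ip_address(parsed.hostname or "")
        problems.append("must use a DNS name, not an IP address")
    except ValueError:
        pass  # not an IP literal, which is what we want
    return problems


uri = build_redirect_uri("https://xnat.example.com", "/openid-login")
print(uri)                         # https://xnat.example.com/openid-login
print(redirect_uri_problems(uri))  # []
```

Running a check like this before emailing AAF support can catch an http:// or IP-based URL early.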

For 2. Can be anything, preferably descriptive.

For 3. Exactly what it says. Usually the university name, depending on your organisation.

For 4. This is important as it dictates which AAF servers your service will authenticate against.

If it is a testing or development environment, you will use the following details:

openid.aaf.accessTokenUri=https://central.test.aaf.edu.au/providers/op/token

openid.aaf.userAuthUri=https://central.test.aaf.edu.au/providers/op/authorize

For production environments (notice no test in the URLs):

openid.aaf.accessTokenUri=https://central.aaf.edu.au/providers/op/token

openid.aaf.userAuthUri=https://central.aaf.edu.au/providers/op/authorize
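The choice between the two sets of URLs can be expressed as a small lookup. This is only a sketch: the function name and the purpose strings are hypothetical, and it assumes development and testing services both use the test endpoints, as described above.

```python
# Base URLs taken from the two configurations shown above.
AAF_BASE_URLS = {
    "test": "https://central.test.aaf.edu.au/providers/op",
    "production": "https://central.aaf.edu.au/providers/op",
}


def aaf_endpoint_properties(purpose):
    """Return the two endpoint lines for openid-provider.properties."""
    if purpose in ("development", "testing"):
        base = AAF_BASE_URLS["test"]
    elif purpose == "production":
        base = AAF_BASE_URLS["production"]
    else:
        raise ValueError("unknown purpose: " + repr(purpose))
    return [
        "openid.aaf.accessTokenUri=" + base + "/token",
        "openid.aaf.userAuthUri=" + base + "/authorize",
    ]


for line in aaf_endpoint_properties("testing"):
    print(line)
```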

For 5. Just go to https://keybase.io/ and create an account to provide to AAF support so you can receive the ClientId and ClientSecret securely.

Installing the AAF Plugin in a working XNAT environment

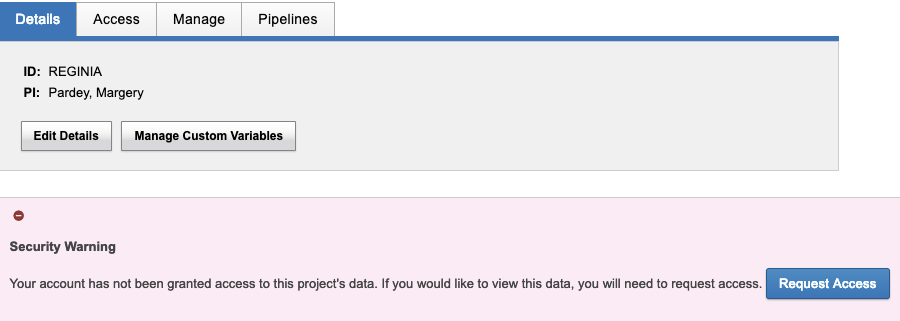

There have been long standing issues with the QCIF plugin that have been resolved by the AIS Deployment team – namely unable to access any projects – see image below.

This issue occurred regardless of project access permissions. You would receive this error message trying to access your own project!

AIS Deployment team created a forked version of the plugin which fixes this issue. You can view it here:

https://github.com/Australian-Imaging-Service/xnat-openid-auth-plugin

To deploy to XNAT, navigate to the XNAT home plugins folder on your XNAT Application Server (normally /data/xnat/home/plugins) and download the plugin there. Assuming Linux:

wget https://github.com/Australian-Imaging-Service/xnat-openid-auth-plugin/releases/download/1.0.2/xnat-openid-auth-plugin-all-1.0.2.jar

Please note this was the latest version at the time of writing this document. Please check here to see if there have been updated versions:

https://github.com/Australian-Imaging-Service/xnat-openid-auth-plugin/releases

You now have xnat-openid-auth-plugin-all-1.0.2.jar in /data/xnat/home/plugins.

You now need the configuration file which will be (assuming previous location for XNAT Home directory):

/data/xnat/home/config/auth/openid-provider.properties

You will need to create this file.

Review this sample file and tailor to your needs:

An example filled-out properties file, with some caveats, is provided below.

Warning

All of the keys are case sensitive. Incorrectly capitalised entries will result in a non-working AAF integration!

These need to be left as is

auth.method=openid

type=openid

provider.id=openid

visible=true

Set these values to false if you want an Admin to enable and verify the account before users are allowed to login - recommended

auto.enabled=false

auto.verified=false

Name displayed in the UI – not particularly important

name=OpenID Authentication Provider

Toggle username & password login visibility

disableUsernamePasswordLogin=false

List of providers that appear on the login page, see options below. In our case we only need aaf but you can have any openid enabled provider

enabled=aaf

Site URL - the main domain, needed to build the pre-established URL below. See notes at top of document

siteUrl=https://xnat.example.com

preEstablishedRedirUri=/openid-login

AAF ClientId and Secret. The keys are CASE SENSITIVE: writing openid.aaf.clientID, for example, would mean the AAF plugin will not function. The values below are fake but show the format. No quotation marks (“) are required.

openid.aaf.clientId=123jsdjd

openid.aaf.clientSecret=chahdkdfdhffkhf

The providers are covered at the top of the document

openid.aaf.accessTokenUri=https://central.test.aaf.edu.au/providers/op/token

openid.aaf.userAuthUri=https://central.test.aaf.edu.au/providers/op/authorize

You can find more details on the remaining values here:

https://github.com/Australian-Imaging-Service/xnat-openid-auth-plugin

openid.aaf.scopes=openid,profile,email

If the below is wrong the AAF logo will not appear on the login page and you won’t be able to login

openid.aaf.link=<p>To sign-in using your AAF credentials, please click on the button below.</p><p><a href="/openid-login?providerId=aaf"><img src="/images/aaf_service_223x54.png" /></a></p>

Flag that sets if we should be checking email domains

openid.aaf.shouldFilterEmailDomains=false

Domains below are allowed to login, only checked when shouldFilterEmailDomains is true

openid.aaf.allowedEmailDomains=example.com

Flag to force the user creation process, normally this should be set to true

openid.aaf.forceUserCreate=true

Flag to set the enabled property of new users, set to false to allow admins to manually enable users before allowing logins, set to true to allow access right away

openid.aaf.userAutoEnabled=false

Flag to set the verified property of new users – use in conjunction with auto.verified

openid.aaf.userAutoVerified=false

Property names to use when creating users

openid.aaf.emailProperty=email

openid.aaf.givenNameProperty=given_name

openid.aaf.familyNameProperty=family_name

If you create your openid-provider.properties file with the above information, tailored to your environment, and have the plugin in place at:

/data/xnat/home/plugins/xnat-openid-auth-plugin-all-1.0.2.jar

you should only need to restart Tomcat to enable login. This assumes you have a valid AAF organisation login.

Using AAF with the AIS Kubernetes Chart Deployment

The AIS Charts Helm template has all you need to set up a completely functional XNAT implementation in minutes. Part of this is AAF integration.

Prerequisites:

• A functional HTTPS URL with valid SSL certificate for your Kubernetes cluster. See the top of this document for details to provide to AAF.

• A ClientId and Secret provided by AAF.

• A Load Balancer or way to connect externally to your Kubernetes using the functional URL with SSL certificate.

Before you deploy the Helm template, clone it via git here:

git clone https://github.com/Australian-Imaging-Service/charts.git

then edit the following file:

charts/releases/xnat/charts/xnat-web/values.yaml

And update the following entries underneath openid:

preEstablishedRedirUri: "/openid-login"
siteUrl: ""
#List of providers that appear on the login page
providers:
  aaf:
    accessTokenUri: https://central.aaf.edu.au/providers/op/token
    #accessTokenUri: https://central.test.aaf.edu.au/providers/op/token
    userAuthUri: https://central.aaf.edu.au/providers/op/authorize
    #userAuthUri: https://central.test.aaf.edu.au/providers/op/authorize
    clientId: ""
    clientSecret: ""

Comment out the Test or Production provider URLs depending on which environment your XNAT will reside in. Using the example configuration from the previous section, the completed entries will look like this:

preEstablishedRedirUri: "/openid-login"
siteUrl: "https://xnat.example.com"
#List of providers that appear on the login page
providers:
  aaf:
    accessTokenUri: https://central.test.aaf.edu.au/providers/op/token
    userAuthUri: https://central.test.aaf.edu.au/providers/op/authorize
    clientId: "123jsdjd"
    clientSecret: "chahdkdfdhffkhf"

You can now deploy your Helm template by following the README here:

https://github.com/Australian-Imaging-Service/charts

For this to work, you will need to point your domain name and SSL certificate to the Kubernetes xnat-web pod, which is outside the scope of this document.

Troubleshooting

Most of the above documentation should remove the need for troubleshooting, but there are a few things to bear in mind.

All entries in the openid-provider.properties file (for existing XNAT deployments) and in the values.yaml file (for Kubernetes deployments) mentioned above are CASE SENSITIVE. The entries must match exactly or AAF won’t work.

If you get a 400 error message when redirecting from XNAT to AAF, the ClientId entry is wrong. This happened before when the properties file had the ClientId key like this:

openid.aaf.clientID

rather than:

openid.aaf.clientId

In that case the client_id section of the redirect URL is empty. The wrongly capitalised entry results in the clientId not being passed to the redirect URL, and a 400 error message.
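Capitalisation mistakes like this are easy to miss by eye. The following is a minimal sketch of a check you could run over the properties text. The helper name and the list of expected keys are assumptions taken from the example file in this document, not from the plugin source.

```python
# Keys copied from the example openid-provider.properties in this document.
EXPECTED_KEYS = [
    "siteUrl",
    "preEstablishedRedirUri",
    "openid.aaf.clientId",
    "openid.aaf.clientSecret",
    "openid.aaf.accessTokenUri",
    "openid.aaf.userAuthUri",
]


def find_miscapitalised_keys(properties_text, expected=EXPECTED_KEYS):
    """Return (found, wanted) pairs for keys that differ from an expected key only by case."""
    by_lower = {key.lower(): key for key in expected}
    problems = []
    for line in properties_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments and lines without a key=value pair
        key = line.split("=", 1)[0].strip()
        wanted = by_lower.get(key.lower())
        if wanted is not None and key != wanted:
            problems.append((key, wanted))
    return problems


sample = "siteUrl=https://xnat.example.com\nopenid.aaf.clientID=123jsdjd\n"
print(find_miscapitalised_keys(sample))
# [('openid.aaf.clientID', 'openid.aaf.clientId')]
```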

Check the log files. The most useful log file for error messages is the Tomcat localhost logfile. On RHEL-based systems, this can be found here (example logfile):

/var/log/tomcat7/localhost.2021-08-08.log

You can also check the XNAT logfiles, mostly here (depending on where XNAT Home is on your system):

/data/xnat/home/logs

2 - Autoscaling XNAT on Kubernetes with EKS

There are three types of autoscaling that Kubernetes offers:

Horizontal Pod Autoscaling

Horizontal Pod Autoscaling (HPA) is a technology that scales the number of replica pods for an application up or down based on resource utilisation targets specified in a values file.

Vertical Pod Autoscaling

Vertical Pod Autoscaling (VPA) increases or decreases the resources allocated to each pod when usage reaches a certain percentage, to help you make the best use of your resources. After some testing, this appears to be a legacy approach. HPA is preferred and is also built into the Helm chart, so we won’t be utilising this technology.

Cluster-autoscaling

Cluster-autoscaling is where the Kubernetes cluster itself spins up or down new Nodes (think EC2 instances in this case) to handle capacity.

You can’t use HPA and VPA together so we will use HPA and Cluster-Autoscaling.

Prerequisites

- Running Kubernetes Cluster and XNAT Helm Chart AIS Deployment

- AWS Application Load Balancer (ALB) as an Ingress Controller with some specific annotations

- Resources (requests and limits) need to be specified in your values file

- Metrics Server

- Cluster-Autoscaler

You can find more information on applying the ALB implementation for the AIS Helm Chart deployment in the ALB-Ingress-Controller document in this repo, so it is not covered here, except to say that some specific annotations are required for autoscaling to work effectively.

Specific annotations required:

alb.ingress.kubernetes.io/target-group-attributes: "stickiness.enabled=true,stickiness.lb_cookie.duration_seconds=1800,load_balancing.algorithm.type=least_outstanding_requests"

alb.ingress.kubernetes.io/target-type: ip

Let’s break down and explain the sections.

Change the stickiness of the Load Balancer:

It is important to set a stickiness time on the load balancer. This forces you to the same pod all the time and retains your session information.

Without stickiness, after logging in, the database thinks you have logged in but the load balancer can alternate which pod you go to. The session details are kept on each pod, so the new pod thinks you aren’t logged in and keeps logging you out. Setting the stickiness time reasonably high, say 30 minutes, gets round this.

stickiness.enabled=true,stickiness.lb_cookie.duration_seconds=1800
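The effect can be shown with a toy simulation. It is not XNAT or ALB code: the Pod class, the user name and the plain round-robin rotation are simplifying assumptions, used only to show why per-pod session storage needs sticky routing.

```python
import itertools


class Pod:
    """A stand-in for an xnat-web pod that keeps sessions in its own memory."""

    def __init__(self, name):
        self.name = name
        self.sessions = set()


def simulate(request_count, sticky):
    """Return, per request, whether the pod that served it knows the user's session."""
    pods = [Pod("xnat-xnat-web-0"), Pod("xnat-xnat-web-1")]
    rotation = itertools.cycle(pods)  # simplified load balancer
    pinned = None                     # pod remembered by the stickiness cookie
    outcomes = []
    for i in range(request_count):
        pod = pinned if (sticky and pinned is not None) else next(rotation)
        if i == 0:
            pod.sessions.add("alice")  # first request is the login
            pinned = pod
        outcomes.append("alice" in pod.sessions)
    return outcomes


print(simulate(4, sticky=False))  # [True, False, True, False]
print(simulate(4, sticky=True))   # [True, True, True, True]
```

Without stickiness every second request lands on a pod that has never seen the login, which matches the repeated log-outs described above.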

Change the Load Balancing Algorithm for best performance:

load_balancing.algorithm.type=least_outstanding_requests

Change the Target type:

Not sure why but if target-type is set to instance and not ip, it disregards the stickiness rules.

alb.ingress.kubernetes.io/target-type: ip

Resources (requests and limits) need to be specified in your values file

In order for HPA and Cluster-autoscaling to work, you need to specify resources - requests and limits, in the AIS Helm chart values file, or it won’t know when to scale.

This makes sense because how can you know when you are running out of resources to start scaling up if you don’t know what your resources are to start with?

In your values file add the following lines below the xnat-web section (please adjust the CPU and memory to fit with your environment):

resources:
  limits:
    cpu: 1000m
    memory: 3000Mi
  requests:
    cpu: 1000m
    memory: 3000Mi

You can read more about what this means here:

https://kubernetes.io/docs/tasks/configure-pod-container/assign-cpu-resource/

From my research with HPA, I discovered a few important facts.

- Horizontal Pod Autoscaler doesn’t care about limits, it bases autoscaling on requests. Requests are meant to be the minimum needed to safely run a pod and limits are the maximum. However, this is completely irrelevant for HPA as it ignores the limits altogether so I specify the same resources for requests and limits. See this issue for more details:

https://github.com/kubernetes/kubernetes/issues/72811

- XNAT is extremely memory hungry, and any pod will use approximately 750MB of RAM without doing anything. This is important as when the requests are set below that, you will have a lot of pods scale up, then scale down and no consistency for the user experience. This will play havoc with user sessions and annoy everyone a lot. Applications - specifically XNAT Desktop can use a LOT of memory for large uploads (I have seen 12GB RAM used on an instance) so try and specify as much RAM as you can for the instances you have. In the example above I have specified 3000MB of RAM and 1 vCPU. The worker node instance has 4 vCPUs and 4GB. You would obviously use larger instances if you can. You will have to do some testing to work out the best Pod to Instance ratio for your environment.
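To get a first estimate of the Pod to Instance ratio, you can divide the node's resources by the pod requests and take the smaller result. The sketch below assumes the whole node is available to pods. In practice Kubernetes reserves some CPU and memory for the system, so the real figure can be lower. The function name is hypothetical.

```python
def pods_per_node(node_cpu_m, node_mem_mi, request_cpu_m, request_mem_mi):
    """Upper bound on pods per node: the scarcer of CPU and memory decides."""
    return min(node_cpu_m // request_cpu_m, node_mem_mi // request_mem_mi)


# The example above: 4 vCPU / 4 GB node, pods requesting 1000m CPU and 3000Mi.
print(pods_per_node(4000, 4096, 1000, 3000))   # 1 (memory is the bottleneck)

# A hypothetical larger node with 4 vCPU and 16 GiB of memory.
print(pods_per_node(4000, 16384, 1000, 3000))  # 4 (CPU is now the bottleneck)
```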

Metrics Server

Download the latest Kubernetes Metrics server yaml file. We will need to edit it before applying the configuration or HPA won’t be able to see what resources are being used and none of this will work.

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Add the following line:

- --kubelet-insecure-tls

to here:

spec:
  containers:
  - args:

Completed section should look like this:

spec:
  containers:
  - args:
    - --kubelet-insecure-tls
    - --kubelet-preferred-address-types=InternalIP,ExternalIP
    - --cert-dir=/tmp
    - --secure-port=443
    - --kubelet-use-node-status-port
    - --metric-resolution=15s

Now apply it to your Cluster:

kubectl -n kube-system apply -f components.yaml

Congratulations - you now have an up and running Metrics server.

You can read more about Metrics Server here:

https://github.com/kubernetes-sigs/metrics-server

Cluster-Autoscaler

There are quite a lot of ways to use the Cluster-autoscaler: single zone node clusters deployed in a single Availability Zone (no AZ redundancy), single zone node clusters deployed in multiple Availability Zones, or single Cluster-autoscalers that deploy in multiple Availability Zones. In this example we will be deploying the autoscaler in multiple Availability Zones (AZs).

In order to do this, a change needs to be made to the StorageClass configuration used.

Delete whatever StorageClasses you have and then recreate them changing the VolumeBindingMode. At a minimum you will need to change the GP2 / EBS StorageClass VolumeBindingMode but if you are using a persistent volume for archive / prearchive, that will also need to be updated.

Change this:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp2
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/aws-ebs
volumeBindingMode: Immediate
parameters:
  fsType: ext4
  type: gp2

to this:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp2
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/aws-ebs
volumeBindingMode: WaitForFirstConsumer
parameters:
  fsType: ext4
  type: gp2

Then run the following commands (assuming the file above is called storageclass.yaml):

kubectl delete sc --all

kubectl apply -f storageclass.yaml

This stops pods trying to bind to volumes in different AZ’s.

You can read more about this here:

https://aws.amazon.com/blogs/containers/amazon-eks-cluster-multi-zone-auto-scaling-groups/

Relevant section:

If you need to run a single ASG spanning multiple AZs and still need to use EBS volumes you may want to change the default VolumeBindingMode to WaitForFirstConsumer as described in the documentation here. Changing this setting “will delay the binding and provisioning of a PersistentVolume until a pod using the PersistentVolumeClaim is created.” This will allow a PVC to be created in the same AZ as a pod that consumes it.

If a pod is descheduled, deleted and recreated, or an instance where the pod was running is terminated then WaitForFirstConsumer won’t help because it only applies to the first pod that consumes a volume. When a pod reuses an existing EBS volume there is still a chance that the pod will be scheduled in an AZ where the EBS volume doesn’t exist.

You can refer to AWS documentation for how to install the EKS Cluster-autoscaler:

https://docs.aws.amazon.com/eks/latest/userguide/cluster-autoscaler.html

This is specific to your deployment (IAM roles, cluster names etc.), so it will not be specified here.

Configure Horizontal Pod Autoscaler

Add the following lines into your values file under the xnat-web section:

autoscaling:
  enabled: true
  minReplicas: 2
  maxReplicas: 100
  targetCPUUtilizationPercentage: 80
  targetMemoryUtilizationPercentage: 80

Tailor it to your own environment. This will create 2 replicas (pods) at start up, up to a limit of 100 replicas, and will scale up pods when CPU or memory utilisation exceeds 80% of the requested amount. Read more about requests here:

https://kubernetes.io/docs/tasks/configure-pod-container/assign-cpu-resource/
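For intuition about when pods are added, the Kubernetes documentation gives the HPA rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with a default 10% tolerance around the target. When several metrics are configured, the largest proposal wins. The sketch below applies that rule to the values used here. It is a simplified model rather than the controller's code, and it assumes the same target percentage for every metric.

```python
import math


def desired_replicas(current_replicas, utilisation_by_metric, target_percent,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified HPA decision. Utilisation is a percentage of the pod *request*."""
    proposals = []
    for utilisation in utilisation_by_metric.values():
        ratio = utilisation / target_percent
        if abs(ratio - 1.0) <= tolerance:
            proposals.append(current_replicas)  # close enough to target: no change
        else:
            proposals.append(math.ceil(current_replicas * ratio))
    wanted = max(proposals)  # the most demanding metric decides
    return max(min_replicas, min(max_replicas, wanted))


# 34% memory and 0% CPU with an 80% target: stays at minReplicas.
print(desired_replicas(2, {"memory": 34, "cpu": 0}, 80, 2, 100))    # 2

# Memory at 120% of the request: scale from 2 to 3 pods.
print(desired_replicas(2, {"memory": 120, "cpu": 10}, 80, 2, 100))  # 3
```

This also shows why a memory request below XNAT's idle usage causes churn: utilisation sits permanently above the target, so the autoscaler keeps adding pods.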

These are the relevant parts of my environment when running the get command:

kubectl -n xnat get horizontalpodautoscaler.autoscaling/xnat-xnat-web

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

xnat-xnat-web StatefulSet/xnat-xnat-web 34%/80%, 0%/80% 2 100 2 3h29m

As you can see 34% of memory is used and 0% CPU. Example of get command for pods - no restarts and running nicely.

kubectl -n xnat get pods

NAME READY STATUS RESTARTS AGE

pod/xnat-xnat-web-0 1/1 Running 0 3h27m

pod/xnat-xnat-web-1 1/1 Running 0 3h23m

Troubleshooting

Check Metrics server is working (assuming in the xnat namespace) and see memory and CPU usage:

kubectl top pods -nxnat

kubectl top nodes

Check Cluster-Autoscaler logs:

kubectl logs -f deployment/cluster-autoscaler -n kube-system

Check the HPA:

kubectl -nxnat describe horizontalpodautoscaler.autoscaling/xnat-xnat-web

3 - Docker Swarm with XNAT

Setting up Docker Swarm

A complete explanation of how to setup Docker Swarm is outside the scope of this document but you can find some useful articles here:

https://scalified.com/2018/10/08/building-jenkins-pipelines-docker-swarm/

https://docs.docker.com/engine/swarm/swarm-tutorial/create-swarm/

https://docs.docker.com/engine/swarm/ingress/

Setting up with AWS:

https://semaphoreci.com/community/tutorials/bootstrapping-a-docker-swarm-mode-cluster

Pipelines

XNAT uses pipelines to perform various different processes - mostly converting image types to other image types (DICOM to NIFTI for example).

In the past this was handled on the instance as part of the XNAT program, then as a docker server on the instance and finally, externally as an external docker server, either directly or using Docker swarm.

XNAT utilises the Container service which is a plugin to perform docker based pipelines. In the case of Kubernetes, docker MUST be run externally so Docker swarm is used as it provides load balancing.

Whilst the XNAT team work on replacing the Container service on Docker Swarm with a Kubernetes based Container service, Docker swarm is the most appropriate stop gap option.

Prerequisites

You will require the Docker API endpoint opened remotely so that XNAT can access and send pipeline jobs to it. For security, this should be done via HTTPS (not HTTP).

Standard port is TCP 2376. With Docker Swarm enabled you can send jobs to any of the manager or worker nodes and it will automatically internally load balance. I chose to use the Manager node’s IP and pointed DNS to it.

You should lock access to port 2376 to the Kubernetes XNAT subnets only, using firewalls or Security Group settings. You can also use an external load balancer with certificates, which may be preferred.

If the certificates are not provided by a known CA, you will need to add the certificates (server, CA and client) to your XNAT container build, so choosing a proper certificate from a known CA will make your life easier.

If you do use self-signed certificates, you will need to create a folder, add the certificates and then specify that folder in the XNAT GUI > Administer > Plugin Settings > Container Server Setup > Edit Host Name. In our example case:

Certificate Path: /usr/local/tomcat/certs

You also need access from the Docker Swarm to the XNAT shared filesystem: at a minimum, Archive and Build. The AIS Helm chart doesn’t have /data/xnat/build set up by default, but without it Docker Swarm can’t write the temporary files it needs and fails.

Setup DNS and external certificates

Whether you will need to create self signed certificates or public CA verified ones, you will need a fully qualified domain name to create them against.

I suggest you set an A record to point to the Manager node IP address, or a Load Balancer which points to all nodes. Then create the certificates against your FQDN - e.g. swarm.example.com.

Allow remote access to Docker API endpoint on TCP 2376

To enable docker to listen on port 2376 edit the service file or create /etc/docker/daemon.json.

We will edit the docker service file. Remember to specify whatever certificates you will be using in here. They will be pointing to your FQDN - in our case above, swarm.example.com.

systemctl edit docker

[Service]

ExecStart=

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2376 --tlsverify --tlscacert /root/.docker/ca.pem --tlscert /root/.docker/server-cert.pem --tlskey /root/.docker/server-key.pem -H unix:///var/run/docker.sock

systemctl restart docker

Repeat on all nodes. Docker Swarm is now listening remotely on TCP 2376.

Secure access to TCP port 2376

Add a firewall rule to only allow access to TCP port 2376 from the Kubernetes subnets.

Ensure Docker Swarm nodes have access to the XNAT shared filesystem

Without access to the Archive shared filesystem Docker cannot run any pipeline conversions. This seems pretty obvious. Less obvious however is that the XNAT Docker Swarm requires access to the Build shared filesystem to run temporary jobs before writing back to Archive upon completion.

This presents a problem as the AIS Helm Chart does not come with a persistent volume for the Build directory, so we need to create one.

Create a volume outside the Helm Chart and then present it in your values file. In this example I created a custom class. Make sure accessMode is ReadWriteMany so Docker Swarm nodes can access.

volumes:

build:

accessMode: ReadWriteMany

mountPath: /data/xnat/build

storageClassName: "custom-class"

volumeMode: Filesystem

persistentVolumeReclaimPolicy: Retain

persistentVolumeClaim:

claimName: "build-xnat-xnat-web"

size: 10Gi

You would need to create the custom-class StorageClass and apply it first or the volume won’t be created. In this case, create a file called storageclass.yaml and add the following contents:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: custom-class
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer

You can then apply it:

kubectl apply -f storageclass.yaml

Of course, you may want to use an existing Storage Class, in which case this may be unnecessary. It is just an example.

Apply the Kubernetes volume file first and then apply the Helm chart and values file. You should now see something like the following:

kubectl get -nxnat pvc,pv

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/archive-xnat-xnat-web Bound archive-xnat-xnat-web 10Gi RWX custom-class 5d1h

persistentvolumeclaim/build-xnat-xnat-web Bound build-xnat-xnat-web 10Gi RWX custom-class 5d1h

persistentvolumeclaim/cache-xnat-xnat-web-0 Bound pvc-b5b72b92-d15f-4a22-9b88-850bd726d1e2 10Gi RWO gp2 5d1h

persistentvolumeclaim/prearchive-xnat-xnat-web Bound prearchive-xnat-xnat-web 10Gi RWX custom-class 5d1h

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/archive-xnat-xnat-web 10Gi RWX Retain Bound xnat/archive-xnat-xnat-web custom-class 5d1h

persistentvolume/build-xnat-xnat-web 10Gi RWX Retain Bound xnat/build-xnat-xnat-web custom-class 5d1h

persistentvolume/prearchive-xnat-xnat-web 10Gi RWX Retain Bound xnat/prearchive-xnat-xnat-web custom-class 5d1h

persistentvolume/pvc-b5b72b92-d15f-4a22-9b88-850bd726d1e2 10Gi RWO Delete Bound xnat/cache-xnat-xnat-web-0 gp2 5d1h

As you can see, the build directory is now a mounted volume. You are now ready to mount the volumes on the Docker swarm nodes.

Depending how you presented your shared filesystem, just create the directories on the Docker swarm nodes and manager (if the manager is also a worker), add to /etc/fstab and mount the volumes.

To make your life easier use the same file structure for the mounts - i.e build volume mounted in /data/xnat/build and archive volume mounted in /data/xnat/archive. If you don’t do this you will need to specify the Docker swarm mounted XNAT directories in the XNAT GUI.

Add your Docker Swarm to XNAT Plugin Settings

You can read about the various options in the official XNAT documentation on their website here:

https://wiki.xnat.org/container-service/installing-and-enabling-the-container-service-in-xnat-126156821.html

https://wiki.xnat.org/container-service/configuring-a-container-host-126156926.html

In the XNAT GUI, go to Administer > Plugin Settings > Container Server Setup and under Docker Server setup select > New Container host.

In our above example, for host name you would enter swarm.example.com, the URL would be https://swarm.example.com:2376 and the certificate path would be /usr/local/tomcat/certs. As previously mentioned, it is desirable to use certificates from a public CA to avoid the need to specify certificates here at all.

Select Swarm Mode to “ON”.

You will need to select Path Translation if you DIDN’T mount the Docker swarm XNAT directories in the same place.

The other options are optional.

Once applied make sure that Status is “Up”. The Image hosts section should also now have a status of Up.

You can now start adding your Images & Commands in the Administer > Plugin Settings > Images & Commands section.

Troubleshooting

If you have configured Docker Swarm to listen on port 2376 but the status says Down, first check that you can telnet or netcat to the port locally, then remotely. From one of the nodes:

nc -zv 127.0.0.1 2376

or

telnet 127.0.0.1 2376

If you can, try remotely from a location that has firewall ingress access. In our example previously, try:

nc -zv swarm.example.com 2376

telnet swarm.example.com 2376
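If nc and telnet are not installed where you are testing from, the same TCP reachability check can be sketched with the Python standard library. The function name is hypothetical. It only confirms that the port accepts a TCP connection and does not validate the TLS certificates.

```python
import socket


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # covers refused connections, timeouts and DNS failures
        return False


# Example usage (run on a Swarm node first, then from the XNAT side):
#   tcp_port_open("127.0.0.1", 2376)
#   tcp_port_open("swarm.example.com", 2376)
```

If the local check succeeds but the remote one fails, look at the firewall or Security Group rules before looking at Docker itself.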

Make sure the correct ports are open and accessible on the Docker swarm manager:

The network ports required for a Docker Swarm to function correctly are:

TCP port 2376 for secure Docker client communication. This port is required for Docker Machine to work. Docker Machine is used to orchestrate Docker hosts.

TCP port 2377. This port is used for communication between the nodes of a Docker Swarm or cluster. It only needs to be opened on manager nodes.

TCP and UDP port 7946 for communication among nodes (container network discovery).

UDP port 4789 for overlay network traffic (container ingress networking).

Make sure docker service is started on all docker swarm nodes.

If Status is set to Up and the container automations are failing, confirm the archive AND build shared filesystems are properly mounted on all servers - XNAT and Docker swarm. A Failed (Rejected) status for a pipeline is likely due to this error.

In this case, as a service can’t be created you won’t have enough time to see the service logs with the usual:

docker service ls

command followed by looking at the service in question, so stop the docker service on the Docker swarm node and start in the foreground, using our service example above:

dockerd -H tcp://0.0.0.0:2376 --tlsverify --tlscacert /root/.docker/ca.pem --tlscert /root/.docker/server-cert.pem --tlskey /root/.docker/server-key.pem -H unix:///var/run/docker.sock

Then upload some dicoms and watch the processing run in the foreground.

Docker Swarm admin guide:

4 - External PGSQL DB Connection

Connecting AIS XNAT Helm Deployment to an External Postgresql Database

By default, the AIS XNAT Helm Deployment creates a Postgresql database in a separate pod to be run locally on the cluster.

If the deployment is destroyed the data in the database is lost. This is fine for testing purposes but unsuitable for a production environment.

Luckily a mechanism was put into the Helm template to allow connecting to an External Postgresql Database.

Updating Helm charts values files to point to an external Database

Firstly, clone the AIS Charts Helm template:

git clone https://github.com/Australian-Imaging-Service/charts.git

values-dev.yaml

This file is located in charts/releases/xnat

Current default configuration:

global:

postgresql:

postgresqlPassword: "xnat"

postgresql:

enabled: true

postgresqlExternalName: ""

postgresqlExternalIPs:

- 139.95.25.8

- 130.95.25.9

In these lines:

postgresql:
  enabled: true

enabled needs to be changed to false to disable creation of the Postgresql pod and use an external database connection instead.

The other details are relatively straightforward. Generally you would only specify either postgresqlExternalName or postgresqlExternalIPs. postgresqlPassword will be your database user password.

An example configuration using a sample AWS RDS instance would look like this:

global:

postgresql:

postgresqlPassword: "yourpassword"

postgresql:

enabled: false

postgresqlExternalName: "xnat.randomstring.ap-southeast-2.rds.amazonaws.com"

Top level values.yaml

This file is also located in charts/releases/xnat

Current default configuration:

global:

postgresql:

postgresqlDatabase: "xnat"

postgresqlUsername: "xnat"

#postgresqlPassword: ""

#servicePort: ""

postgresql:

enabled: true

postgresqlExternalName: ""

postgresqlExternalIPs: []

An example configuration using a sample AWS RDS instance would look like this:

global:

postgresql:

postgresqlDatabase: "yourdatabase"

postgresqlUsername: "yourusername"

postgresqlPassword: "yourpassword"

postgresql:

enabled: false

postgresqlExternalName: "xnat.randomstring.ap-southeast-2.rds.amazonaws.com"

Please change the database, username, password and External DNS (or IP) details to match your environment.

xnat-web values.yaml

This file is also located in charts/releases/xnat/charts/xnat-web

Current default configuration:

postgresql:

postgresqlDatabase: "xnat"

postgresqlUsername: "xnat"

postgresqlPassword: "xnat"

Change to match your environment as with the other values.yaml.

You should now be able to connect your XNAT application Kubernetes deployment to your external Postgresql DB to provide a suitable environment for production.

For more details about deployment have a look at the README.md here:

https://github.com/Australian-Imaging-Service/charts/tree/main/releases/xnat

Creating an encrypted connection to an external Postgresql Database

The database connection string for XNAT is found in the XNAT home directory, usually /data/xnat/home/config/xnat-conf.properties

By default the connection is unencrypted. If you wish to encrypt this connection you must append SSL options to the end of the database connection string.

Usual string: datasource.url=jdbc:postgresql://xnat-postgresql/yourdatabase

Options:

| Option | Description |
|---|---|
| ssl=true | Use SSL encryption |
| sslmode=require | Require SSL encryption |
| sslfactory=org.postgresql.ssl.NonValidatingFactory | Do not require validation of the Certificate Authority |

The last option is useful because otherwise you would need to import the CA certificate into the Java keystore in the Docker container.

That would mean updating and rebuilding the XNAT Docker image before it is deployed to the Kubernetes pod, which can be impractical.

The complete string would look like this (all on one line): datasource.url=jdbc:postgresql://xnat-postgresql/yourdatabase?ssl=true&sslmode=require&sslfactory=org.postgresql.ssl.NonValidatingFactory
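As a quick illustration of how the options are appended, the following sketch assembles the same connection string from its parts. The helper name `jdbc_url` is hypothetical; the host, database and option values are the ones used in this section.

```python
from typing import Optional
from urllib.parse import urlencode

# The three options from the table above, in the order used in this document.
SSL_OPTIONS = {
    "ssl": "true",
    "sslmode": "require",
    "sslfactory": "org.postgresql.ssl.NonValidatingFactory",
}


def jdbc_url(host: str, database: str, options: Optional[dict] = None) -> str:
    """Build a PostgreSQL JDBC URL, appending options as a query string."""
    base = f"jdbc:postgresql://{host}/{database}"
    if not options:
        return base
    # The first option is introduced by '?', the rest are joined with '&'.
    return f"{base}?{urlencode(options)}"


print("datasource.url=" + jdbc_url("xnat-postgresql", "yourdatabase", SSL_OPTIONS))
```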

Update your Helm Configuration:

Update the following line in charts/releases/xnat/charts/xnat-web/templates/secrets.yaml from:

datasource.url=jdbc:postgresql://{{ template "xnat-web.postgresql.fullname" . }}/{{ template "xnat-web.postgresql.postgresqlDatabase" . }}

to:

datasource.url=jdbc:postgresql://{{ template "xnat-web.postgresql.fullname" . }}/{{ template "xnat-web.postgresql.postgresqlDatabase" . }}?ssl=true&sslmode=require&sslfactory=org.postgresql.ssl.NonValidatingFactory

Then deploy / redeploy.

Note that the database server you are connecting to must already be configured to accept encrypted (SSL) connections for this to succeed.

Configuring that is outside the scope of this document.

5 - Logging With EFK

EFK centralized logging collecting and monitoring

For AIS deployments, we use the EFK stack on Kubernetes for log aggregation, monitoring and analysis. EFK is a suite of 3 tools: Elasticsearch, Fluentd and Kibana.

Elasticsearch nodes form the cluster at the core of the stack. You can run a single-node Elasticsearch, but a high-availability Elasticsearch cluster requires a minimum of 3 master nodes. If one node fails, the cluster still functions and can self-heal.

A Kibana instance is used as the visualisation tool for users to interact with the Elasticsearch cluster.

Fluentd is used as the log collector.

In the following guide, we leverage Elastic and Fluentd’s official Helm charts before using Kustomize to customize other required K8s resources.
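The three-master minimum mentioned above follows from majority voting among master-eligible nodes: a cluster can keep electing a master only while more than half of them are reachable. The sketch below works through that arithmetic; the function names are illustrative only. For 3 nodes it gives a quorum of 2, which is the value used for minimumMasterNodes in the custom values file later in this guide.

```python
def quorum(master_nodes: int) -> int:
    """Smallest number of master-eligible nodes that forms a majority."""
    return master_nodes // 2 + 1


def tolerated_failures(master_nodes: int) -> int:
    """How many master-eligible nodes can be lost while a majority remains."""
    return master_nodes - quorum(master_nodes)


for n in (1, 2, 3):
    print(f"{n} master node(s): quorum={quorum(n)}, tolerated failures={tolerated_failures(n)}")
```

With 1 or 2 master nodes no failure can be tolerated, which is why 3 is the smallest highly available size.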

Creating a new namespace for EFK

$ kubectl create ns efk

Add official Helm repos

For both Elasticsearch and Kibana:

$ helm repo add elastic https://helm.elastic.co

As of this writing, the latest helm repo supports Elasticsearch 7.17.3. It doesn’t work with the latest Elasticsearch v8.3 yet.

For Fluentd:

$ helm repo add fluent https://fluent.github.io/helm-charts

Install Elasticsearch

In keeping with Elasticsearch security principles, all traffic between nodes in the Elasticsearch cluster and all traffic between clients and the cluster needs to be encrypted. This guide uses self-signed certificates.

Generating self signed CA and certificates

- Below we use elasticsearch-certutil to generate a password-protected self-signed CA and certificates, then use the openssl tool to convert them to PEM format

$ docker rm -f elastic-helm-charts-certs || true

$ rm -f elastic-certificates.p12 elastic-certificate.pem elastic-certificate.crt elastic-stack-ca.p12 || true

$ docker run --name elastic-helm-charts-certs -i -w /tmp docker.elastic.co/elasticsearch/elasticsearch:7.16.3 \

/bin/sh -c " \

elasticsearch-certutil ca --out /tmp/elastic-stack-ca.p12 --pass 'Changeme' && \

elasticsearch-certutil cert --name security-master --dns security-master --ca /tmp/elastic-stack-ca.p12 --pass 'Changeme' --ca-pass 'Changeme' --out /tmp/elastic-certificates.p12" && \

docker cp elastic-helm-charts-certs:/tmp/elastic-stack-ca.p12 ./ && \

docker cp elastic-helm-charts-certs:/tmp/elastic-certificates.p12 ./ && \

docker rm -f elastic-helm-charts-certs && \

openssl pkcs12 -nodes -passin pass:'Changeme' -in elastic-certificates.p12 -out elastic-certificate.pem

openssl pkcs12 -nodes -passin pass:'Changeme' -in elastic-stack-ca.p12 -out elastic-ca-cert.pem

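- Convert the generated CA and certificates to base64-encoded format. These will be used to create the secrets in K8s. Alternatively, you can use kubectl to create the secrets directly. The -i/-o options below are the macOS form of base64; GNU coreutils base64 has no -o option, so redirect the output to a file instead.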

$ base64 -i elastic-certificates.p12 -o elastic-certificates-base64

$ base64 -i elastic-stack-ca.p12 -o elastic-stack-ca-base64

- Generate base64 encoded format for passwords for keystore and truststore.

$ echo -n Changeme | base64 > store-password-base64
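For reference, the password encoding above can be reproduced without the shell. This is a small sketch, with the helper name `k8s_secret_value` chosen for illustration; it also shows why the -n flag matters, since a trailing newline would become part of the encoded password.

```python
import base64


def k8s_secret_value(plain: str) -> str:
    """Base64-encode a string the way a Kubernetes Secret 'data' field expects it."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


encoded = k8s_secret_value("Changeme")
print(encoded)

# Decoding gives back exactly the original bytes.
print(base64.b64decode(encoded))

# Without 'echo -n' the newline is encoded too, producing a different value.
print(k8s_secret_value("Changeme\n") == encoded)
```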

Create Helm custom values file elasticsearch.yml

- Create a 3-master-node Elasticsearch cluster named “elasticsearch”.

clusterName: elasticsearch

replicas: 3

minimumMasterNodes: 2

- Specify the compute resources you allocate to Elasticsearch pod

resources:

requests:

cpu: "1000m"

memory: "2Gi"

limits:

cpu: "1000m"

memory: "2Gi"

- Specify the password for the default super user 'elastic'

secret:

enabled: false

password: Changeme

- Specify the protocol used for the readiness probe. Use https so that all traffic to the cluster is on an encrypted link

protocol: https

- Disable the SSL certificate auto creation, we’ll use self signed certificate created earlier

createCert: false

- Configuration for the volumeClaimTemplate for Elasticsearch statefulset. A customised storage class ’es-ais’ will be defined by Kustomize

volumeClaimTemplate:

accessModes: ["ReadWriteMany"]

resources:

requests:

storage: 50Gi

storageClassName: es-ais

- Mount the secret

secretMounts:

- name: elastic-certificates

secretName: elastic-certificates

path: /usr/share/elasticsearch/config/certs

- Add the elasticsearch.yml configuration file. Enable transport TLS for encrypted internode communication and HTTP TLS for encrypted client communication. The previously generated certificates are used; they are passed in from the mounted Secrets

esConfig:

elasticsearch.yml: |

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.client_authentication: required

xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12

xpack.security.http.ssl.enabled: true

xpack.security.http.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12

- Map secrets into the keystore

keystore:

- secretName: transport-ssl-keystore-password

- secretName: transport-ssl-truststore-password

- secretName: http-ssl-keystore-password

- Supply extra environment variables.

extraEnvs:

- name: "ELASTIC_PASSWORD"

value: Changeme

Kustomize for Elasticsearch

- Create Kustomize file kustomization.yaml

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

resources:

- all.yaml

- storageclass.yaml

- secrets.yaml

- Create storageclass.yaml as referenced above. Below is the example when using AWS EFS as the persistent storage. You can adjust to suit your storage infrastructure.

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: es-ais

provisioner: efs.csi.aws.com

mountOptions:

- tls

parameters:

provisioningMode: efs-ap

fileSystemId: YourEFSFileSystemId

directoryPerms: "1000"

- Create secrets.yaml as referenced. Secrets created are used in the custom values file

apiVersion: v1

data:

elastic-certificates.p12: CopyAndPasteValueOf-elastic-certificates-base64

kind: Secret

metadata:

name: elastic-certificates

namespace: efk

type: Opaque

---

apiVersion: v1

data:

xpack.security.transport.ssl.keystore.secure_password: CopyAndPasteValueOf-store-password-base64

kind: Secret

metadata:

name: transport-ssl-keystore-password

namespace: efk

type: Opaque

---

apiVersion: v1

data:

xpack.security.transport.ssl.truststore.secure_password: CopyAndPasteValueOf-store-password-base64

kind: Secret

metadata:

name: transport-ssl-truststore-password

namespace: efk

type: Opaque

---

apiVersion: v1

data:

xpack.security.http.ssl.keystore.secure_password: CopyAndPasteValueOf-store-password-base64

kind: Secret

metadata:

name: http-ssl-keystore-password

namespace: efk

type: Opaque

Install Elasticsearch Helm chart

Change to your Kustomize directory for Elasticsearch and run

$ helm upgrade -i -n efk es elastic/elasticsearch -f YourCustomValueDir/elasticsearch.yml --post-renderer ./kustomize
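The command above passes --post-renderer ./kustomize, an executable that this guide does not show. Helm pipes the rendered manifests to a post-renderer on standard input and uses whatever it writes to standard output. The following is a minimal sketch of such an executable, assuming it saves the manifests as all.yaml (the name listed in kustomization.yaml) and then runs `kustomize build .` in the current directory. The function names and the use of Python are assumptions; the project's actual script may differ.

```python
#!/usr/bin/env python3
import subprocess
import sys
from pathlib import Path


def post_render(manifests: str, workdir: Path,
                build_cmd=("kustomize", "build", ".")) -> str:
    """Save Helm's rendered manifests as all.yaml, then return the build output."""
    # kustomization.yaml lists all.yaml as a resource, so it must exist before the build.
    (workdir / "all.yaml").write_text(manifests)
    result = subprocess.run(list(build_cmd), cwd=workdir, check=True,
                            capture_output=True, text=True)
    return result.stdout


def main() -> None:
    # Helm writes the rendered chart to stdin and reads the final manifests from stdout.
    sys.stdout.write(post_render(sys.stdin.read(), Path.cwd()))
```

To use it, save it as ./kustomize next to kustomization.yaml, add a final `main()` call, and make the file executable. This sketch leaves all.yaml in place after the build.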

Wait until all Elasticsearch pods are in “Running” status

$ kubectl get po -n efk -l app=elasticsearch-master

Install Kibana

Kibana enables the visual analysis of data from Elasticsearch indices. In this guide, we use a single instance.

Create Helm custom values file kibana.yml

- Specify the URL to connect to Elasticsearch. We use the service name and port configured in Elasticsearch

elasticsearchHosts: "https://elasticsearch-master:9200"

- Specify the protocol for Kibana’s readiness check

protocol: https

- Add the kibana.yml configuration below (set through kibanaConfig), which enables Kibana to talk to Elasticsearch over an encrypted connection. For xpack.security.encryptionKey, you can use any text string that is at least 32 characters long, so replace the Changeme placeholder. Certificates are mounted from the secret resource

kibanaConfig:

kibana.yml: |

server.ssl:

enabled: true

key: /usr/share/kibana/config/certs/elastic-certificate.pem

certificate: /usr/share/kibana/config/certs/elastic-certificate.pem

xpack.security.encryptionKey: Changeme

elasticsearch.ssl:

certificateAuthorities: /usr/share/kibana/config/certs/elastic-ca-cert.pem

verificationMode: certificate

elasticsearch.hosts: https://elasticsearch-master:9200

- Supply the PEM-formatted Elastic certificates. These certificates are used in kibana.yml in the previous step

secretMounts:

- name: elastic-certificates-pem

secretName: elastic-certificates-pem

path: /usr/share/kibana/config/certs

- Configure extra environment variables to pass to Kibana container on starting up.

extraEnvs:

- name: "KIBANA_ENCRYPTION_KEY"

valueFrom:

secretKeyRef:

name: kibana

key: encryptionkey

- name: "ELASTICSEARCH_USERNAME"

value: elastic

- name: "ELASTICSEARCH_PASSWORD"

value: Changeme

- We expose Kibana as the NodePort service.

service:

type: NodePort
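The kibana.yml step above asks for an xpack.security.encryptionKey of at least 32 characters. One way to produce such a value is sketched below using Python's standard `secrets` module; the helper name `new_encryption_key` is hypothetical, and any sufficiently long random string would do. The second line printed is the base64 form, which is what a Kubernetes Secret data field expects.

```python
import base64
import secrets


def new_encryption_key(length: int = 32) -> str:
    """Return a random hexadecimal string of the requested length (32 or more)."""
    if length < 32:
        raise ValueError("the key must be at least 32 characters long")
    # token_hex(n) returns 2*n hexadecimal characters; trim to the exact length.
    return secrets.token_hex((length + 1) // 2)[:length]


key = new_encryption_key()
print(key)
print(base64.b64encode(key.encode("ascii")).decode("ascii"))
```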

Kustomize for Kibana

- Define the Secrets that are used in kibana.yml

apiVersion: v1

data:

# use base64 format of values of elasticsearch's elastic-certificate.pem and elastic-ca-cert.pem

elastic-certificate.pem: Changeme

elastic-ca-cert.pem: Changeme

kind: Secret

metadata:

name: elastic-certificates-pem

namespace: efk

type: Opaque

---

apiVersion: v1

data:

# use base64 format of the value you use for xpack.security.encryptionKey

encryptionkey: Changeme

kind: Secret

metadata:

name: kibana

namespace: efk

type: Opaque

- Optional: create an Ingress resource to point to the Kibana service

Install/update the Kibana chart

Change to your Kustomize directory for Kibana and run

$ helm upgrade -i -n efk kibana elastic/kibana -f YourCustomValueDirForKibana/kibana.yml --post-renderer ./kustomize

Wait until the Kibana pod is in “Running” status

$ kubectl get po -n efk -l app=kibana

Install Fluentd

Create a custom Helm values file fluentd.yml

- Specify where to output the logs

elasticsearch:

host: elasticsearch-master

Kustomize for Fluentd

- Create a ConfigMap that includes all Fluentd configuration files as below or you can use your own configuration files.

apiVersion: v1

kind: ConfigMap

metadata:

name: fluentd-config

data:

01_sources.conf: |-

## logs from podman

<source>

@type tail

@id in_tail_container_logs

@label @KUBERNETES

# path /var/log/containers/*.log

path /var/log/containers/*.log

pos_file /var/log/fluentd-containers.log.pos

tag kubernetes.*

read_from_head true

<parse>

@type multi_format

<pattern>

format json

time_key time

time_type string

time_format "%Y-%m-%dT%H:%M:%S.%NZ"

keep_time_key true

</pattern>

<pattern>

format regexp

expression /^(?<time>.+) (?<stream>stdout|stderr)( (.))? (?<log>.*)$/

time_format '%Y-%m-%dT%H:%M:%S.%NZ'

keep_time_key true

</pattern>

</parse>

emit_unmatched_lines true

</source>

02_filters.conf: |-

<label @KUBERNETES>

<match kubernetes.var.log.containers.fluentd**>

@type relabel

@label @FLUENT_LOG

</match>

<match kubernetes.var.log.containers.**_kube-system_**>

@type null

@id ignore_kube_system_logs

</match>

<match kubernetes.var.log.containers.**_efk_**>

@type null

@id ignore_efk_stack_logs

</match>

<filter kubernetes.**>

@type kubernetes_metadata

@id filter_kube_metadata

skip_labels true

skip_container_metadata true

skip_namespace_metadata true

skip_master_url true

</filter>

<match **>

@type relabel

@label @DISPATCH

</match>

</label>

03_dispatch.conf: |-

<label @DISPATCH>

<filter **>

@type prometheus

<metric>

name fluentd_input_status_num_records_total

type counter

desc The total number of incoming records

<labels>

tag ${tag}

hostname ${hostname}

</labels>

</metric>

</filter>

<match **>

@type relabel

@label @OUTPUT

</match>

</label>

04_outputs.conf: |-

<label @OUTPUT>

<match kubernetes.**>

@id detect_exception

@type detect_exceptions

remove_tag_prefix kubernetes

message log

multiline_flush_interval 3

max_bytes 500000

max_lines 1000

</match>

<match **>

@type copy

<store>

@type stdout

</store>

<store>

@type elasticsearch

host "elasticsearch-master"

port 9200

path ""

user elastic

password Changeme

index_name ais.${tag}.%Y%m%d

scheme https

# set to false for self-signed cert

ssl_verify false

# supply Elasticsearch's CA certificate if it's trusted

# ca_file /tmp/elastic-ca-cert.pem

ssl_version TLSv1_2

<buffer tag, time>

# timekey 3600 # 1 hour time slice

timekey 60 # 1 min time slice

timekey_wait 10

</buffer>

</store>

</match>

</label>
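To make two parts of this configuration more concrete, the sketch below applies the second parse pattern from 01_sources.conf to a sample log line and expands the index_name template from 04_outputs.conf. It is an illustration only: Fluentd and its Elasticsearch output plugin do this work themselves, and the sample log line, the tag and the function names are made up for the example.

```python
import re
from datetime import datetime, timezone

# Python spelling of the regexp <pattern> in 01_sources.conf
# (Ruby's (?<name>...) named groups become (?P<name>...)).
LINE = re.compile(r"^(?P<time>.+) (?P<stream>stdout|stderr)( (.))? (?P<log>.*)$")


def parse_line(line: str) -> dict:
    """Split a container log line into its time, stream and log fields."""
    match = LINE.match(line)
    if match is None:
        raise ValueError("line does not match the container log pattern")
    return match.groupdict()


def index_name(tag: str, when: datetime) -> str:
    """Mirror the template 'ais.${tag}.%Y%m%d' for a given tag and time."""
    return f"ais.{tag}.{when:%Y%m%d}"


# A made-up log line in the 'timestamp stream flag message' layout.
record = parse_line("2022-07-01T10:15:30.123456789Z stdout F Starting XNAT")
print(record)

# 'example-tag' is a placeholder; the real tag depends on the log file path.
print(index_name("example-tag", datetime(2022, 7, 1, tzinfo=timezone.utc)))
```

Because the index name includes the date, a new index is started for each tag every day.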

Install/update the Fluentd chart

Change to your Kustomize directory for Fluentd and run

$ helm upgrade -i -n efk fluentd fluent/fluentd --values YourCustomValueDirForFluentd/fluentd.yml --post-renderer ./kustomize

Fluentd is deployed as a DaemonSet, which ensures that a Fluentd pod is created on each worker node. Wait until the Fluentd pods are in “Running” status

$ kubectl get po -l app.kubernetes.io/name=fluentd -n efk

6 - PostgreSQL Database Tuning

XNAT Database Tuning Settings for PostgreSQL

If XNAT is performing poorly, such as very long delays when adding a Subjects tab, it may be due to the small default Postgres memory configuration.

To change the Postgres memory configuration to better match the available system memory, add/edit the following settings in /etc/postgresql/10/opex/postgresql.conf

work_mem = 50MB

maintenance_work_mem = 128MB

effective_cache_size = 256MB
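The "add/edit" step can also be scripted. The sketch below rewrites the text of a postgresql.conf file so that each setting is replaced where an active line already exists and appended otherwise. It is a simplified illustration: the function name `apply_settings` is hypothetical, only lines written as `name = value` are recognised, and commented-out lines are left untouched.

```python
import re

# The three settings recommended above.
TUNING = {
    "work_mem": "50MB",
    "maintenance_work_mem": "128MB",
    "effective_cache_size": "256MB",
}


def apply_settings(conf_text: str, settings: dict) -> str:
    """Return conf_text with each setting replaced in place or appended."""
    lines = conf_text.splitlines()
    seen = set()
    for i, line in enumerate(lines):
        # Match active (uncommented) 'name = value' lines only.
        match = re.match(r"^\s*([a-z_]+)\s*=", line)
        if match and match.group(1) in settings:
            key = match.group(1)
            lines[i] = f"{key} = {settings[key]}"
            seen.add(key)
    # Anything not found as an active line is added at the end of the file.
    for key, value in settings.items():
        if key not in seen:
            lines.append(f"{key} = {value}")
    return "\n".join(lines) + "\n"


sample = "# sample fragment\nwork_mem = 4MB\n#maintenance_work_mem = 64MB\n"
print(apply_settings(sample, TUNING))
```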

For further information see:

7 - Operational recommendations

Requirements and rationales

Collaboration and knowledge share

Tools have been selected with a security-oriented focus, while still enabling collaboration and the sharing of site-specific configurations, experiences and recommendations.

Security

A layered security approach with mechanisms to provide access at granular levels either through Access Control Lists (ACLs) or encryption

Automated deployment

- Allow use of Continuous Delivery (CD) pipelines

- Incorporate automated testing principles, such as Canary deployments

Federation of service

Tools

- Git - version control

- GnuPG - Encryption key management

- This can be replaced with a corporate Key Management Service (KMS) if your organisation supports this type of service.

- Secrets OPerationS (SOPS)

- Encryption of secrets to allow configuration to be securely placed in version control.

- SOPS allows full file encryption much like many other tools, however, individual values within certain files can be selectively encrypted. This allows the majority of the file that does not pose a site specific security risk to be available for review and sharing amongst Federated support teams. This should also comply with most security team requirements (please ensure this is the case)

- Can utilise GnuPG keys for encryption but can also integrate with corporate Key Management Services (KMS) and role-based groups (such as AWS IAM accounts)

- git-secrets

- Git enhancement that utilises pattern matching to help prevent sensitive information being submitted to version control by accident.

Warning

Does not replace diligence but can help safeguard against mistakes.

8 -

Operational recommendations

The /docs/_operational folder is a dump directory for any documentation related to the day-to-day running of AIS released services. This includes, but is not limited to, operational tasks such as:

- Administration tasks

- Automation

- Release management

- Backup and disaster recovery

Jekyll is used to render these documents and any MarkDown files with the appropriate FrontMatter tags will appear in the Operational drop-down menu item.